GovTech Singapore

During my internship at GovTech Singapore, I contributed to the redevelopment of the Singapore Government Design System (SGDS) and built a scalable backend for a task management app showcased at the STACK conference (4,000+ attendees).

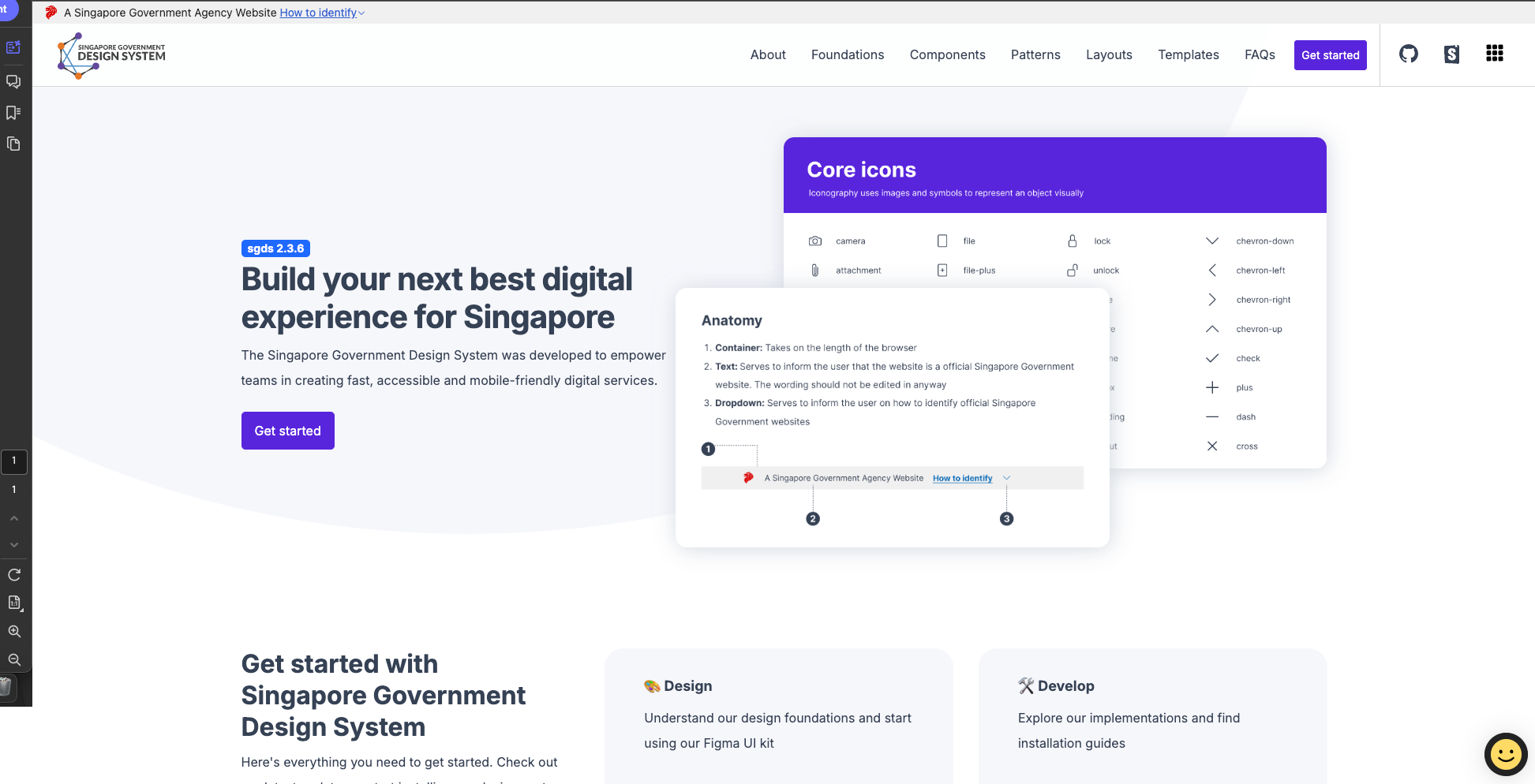

Singapore Government Design System (SGDS)

- Redeveloped 15+ web components using HTML, CSS, and JavaScript, improving client feedback scores by 34% during the migration from SGDS v2.0 to v3.0.

- Used the Shadow DOM for style encapsulation, so components are isolated from and unaffected by external styles. Because web components are custom HTML tags, they are framework-agnostic and can also be used with no framework at all.

- Used the Lit library to simplify web component creation and efficiently manage Shadow DOM encapsulation.

SSR Challenges and Solutions

Web components built with Shadow DOM present challenges with Server-Side Rendering (SSR):

- Custom elements rely on JavaScript, so their functionality is only applied after client-side JS executes, causing a Flash of Unstyled Content (FOUC).

- Shadow DOM is a browser API, so it does not render server-side. The HTML is left without styles until the browser processes the JS.

To mitigate FOUC, we explored:

- WASM web components, which use WebAssembly (a binary instruction format that lets low-level languages like C++ or Rust run on the web). They are platform-independent and can run in both server-side and client-side environments.

- We concluded that this was a limitation of the Lit library and urged clients to use Client-Side Rendering (CSR) when required. Redoing everything with Enhance WASM web components would not have been feasible.

- Clients could use CSR for the parts of a page that need the web components and SSR for everything else. Frameworks like Astro.js that use the islands architecture would be a good solution.

Task Management App Demo

Built a scalable task management app that integrated SGDS web components. The app allowed users to manage tasks and receive notifications for deadlines. This project focused on performance optimization and scalability.

Tech Stack and Design Decisions

- FastAPI: Chosen for its asynchronous capabilities with asyncio, integration with the SQLAlchemy ORM (simplified DB access and database abstraction using Python objects), Pydantic for strict type validation and serialisation, fast data handling, easy OpenAPI documentation, and built-in dependency injection (to manage auth and DB connections easily).

  - Python's asyncio operates similarly to JavaScript's event loop. JavaScript is primarily single-threaded, though Node.js uses a thread pool from the libuv library for some blocking operations such as file I/O. In JavaScript, the event loop first runs the call stack. Once it is empty, it drains the microtask queue (which handles promise callbacks like .then and .finally), and then it processes the macrotask queue (which includes tasks such as timeouts and I/O callbacks). Similarly, asyncio uses an event loop to manage coroutines, enabling non-blocking I/O (e.g., DB queries) without locks, but it is not ideal for CPU-bound tasks because the loop runs on a single thread. FastAPI also runs on an ASGI server (ASGI is the asynchronous server interface), while other Python frameworks like Flask run on WSGI, a synchronous interface. This makes FastAPI better than Flask at handling concurrent I/O, and hence better for scaling. CPU-bound tasks are another story: because of Python's GIL, they need multiple processes rather than threads.

- PostgreSQL: Used B-tree indexes to speed up frequent queries while balancing read performance against write and storage overhead.

- JWT Authentication: Stateless authentication enabled horizontal scaling without session management overhead.

  - When a user authenticates, the server generates a JWT, cryptographically signs it using a secret, and sends it to the client. For subsequent requests, the client includes the token (typically in the HTTP Authorization header). The server verifies the token's signature using the same secret each time.

  - No session data is stored on the server, so there is no need to direct each client's requests to the same server instance (that is, no sticky session management at the reverse proxy).

- RabbitMQ: Message broker that implements the AMQP protocol.

  - Producers send messages to an exchange with a routing key, and the exchange routes each message to one or more queues based on binding keys.

  - Consumers then subscribe to these queues to process the messages.

  - Once a consumer successfully processes a message, it sends an ACK back to RabbitMQ. If processing fails, the message can be requeued for another attempt.

  - Chosen over Redis Pub/Sub, which is fast and lightweight but lacks message durability, acknowledgements, and delivery reliability.

  - Chosen over WebSockets because they require stateful connections (sticky sessions). RabbitMQ decouples message production from consumption and does not need sticky sessions, which is better for horizontal scaling.

- Connection Pooling: Reuses existing connections instead of creating new ones for each request, reducing overhead and improving throughput.

- Prefetch Tuning: Limits the number of messages sent to a consumer at a time, preventing overload and ensuring faster message processing.

- Server-Sent Events (SSE): Chosen over WebSockets for lightweight, unidirectional updates, reducing complexity and resource usage for task notifications. Because SSE is unidirectional, there is also less need for sticky sessions when scaling horizontally. SSE or WebSockets have to be used here because browsers do not support the AMQP protocol out of the box.

  - SSE is stateless and does not require sticky sessions since it runs over HTTP keep-alive, which is inherently stateless and does not maintain any specific state about the client. WebSockets are stateful: the server must maintain the state of the connection for the duration of the session, and if the connection is interrupted, the server must be able to resume the session from where it left off.

- Redis: Cached results for high-frequency queries.

  - Redis is blazingly fast because it stores data entirely in RAM and uses a single-threaded event loop that minimises context-switching overhead.

  - However, Redis is not suitable as the primary DB in my use case, as it does not support full ACID transactions or secondary indexes.

Technical Challenges and Solutions

- Indexing Design Decisions: Added unique constraints on `username` and `email` for data integrity. Chose composite non-clustered indexes (e.g., `(assigned_user_id, status)`) to optimize query performance for frequent operations despite increased storage use. Avoided clustering due to high maintenance overhead and reliance on Redis caching for reducing database load.

  - Clustered Index: Determines the physical order of data on disk but requires manual maintenance via `CLUSTER`. PostgreSQL allows only one per table.

  - Non-Clustered Index: Uses a separate data structure pointing to the actual data, is automatically maintained, and supports multiple indexes per table, including composite indexes.

  - Trade-offs: Indexes increase storage and write overhead (each update modifies all relevant indexes), so they should be limited to necessary queries.

  - Advanced Indexing: Consider `GIN`, `GiST`, or `BRIN` for full-text search, geometric data, or large datasets.

  - Future Scaling: Can implement partitioning (e.g., by `deadline`) when tables grow large. Horizontal partitioning (sharding) is good when queries scan only a subset of the rows, while vertical partitioning is good when some columns require greater security than others or are accessed more frequently.

  - Performance Testing: Used `EXPLAIN ANALYZE` to verify composite index speedups, balancing storage cost with query efficiency.


- Redis Caching: Cached frequently queried data such as "tasks due this week" to reduce database load and improve response times. Avoided pre-warming due to the unpredictability of user-triggered actions.

- RabbitMQ with FastAPI: RabbitMQ consumption was handled on a separate thread to prevent blocking the FastAPI event loop. This approach avoided the following scenarios:

  - Scenario A (Blocking): Consuming from a `pika.BlockingConnection` on the main FastAPI thread would block the event loop, freezing the HTTP server and leaving endpoints unresponsive.

  - Scenario B (Async Misuse): `pika.BlockingConnection` is not asyncio-compatible. Wrapping blocking calls in `async` functions would still block the event loop, defeating the purpose of concurrency in FastAPI.

  - Scenario C (Thread + queue.Queue): A blocking `queue.Queue` would still cause issues in the asynchronous FastAPI context, since its `get()` blocks the calling thread and cannot be used with the `await` keyword. Instead, an `asyncio.Queue` was used for inter-thread communication, allowing the FastAPI SSE endpoint to process messages asynchronously without blocking the event loop. Because `asyncio.Queue` is not thread-safe, the consumer thread has to enqueue messages with `asyncio.run_coroutine_threadsafe()`.
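
The thread-plus-`asyncio.Queue` arrangement from Scenario C can be sketched as follows. This is an illustration under assumptions rather than the project's code: `consume_in_thread` is a hypothetical stand-in for a blocking pika consumer (it just iterates over a list so the example runs without a broker), and `sse_events` mimics the async generator an SSE endpoint would stream from.

```python
import asyncio
import threading


def consume_in_thread(loop, queue, messages):
    """Stand-in for a blocking consumer running outside the event loop thread."""
    for body in messages:
        # asyncio.Queue is not thread-safe, so the put() coroutine is
        # scheduled on the loop's own thread instead of being called directly.
        future = asyncio.run_coroutine_threadsafe(queue.put(body), loop)
        future.result()  # block this worker thread until the item is enqueued
    # A None sentinel tells the async side that no more messages are coming.
    asyncio.run_coroutine_threadsafe(queue.put(None), loop).result()


async def sse_events(queue):
    """Yield SSE-formatted frames without ever blocking the event loop."""
    while True:
        body = await queue.get()
        if body is None:
            break
        yield f"data: {body}\n\n"


async def main():
    loop = asyncio.get_running_loop()
    queue = asyncio.Queue()
    worker = threading.Thread(
        target=consume_in_thread,
        args=(loop, queue, ["task 1 due", "task 2 due"]),
        daemon=True,
    )
    worker.start()
    # The event loop stays free to serve other requests while awaiting the queue.
    return [event async for event in sse_events(queue)]


if __name__ == "__main__":
    for frame in asyncio.run(main()):
        print(frame, end="")
```

Calling `future.result()` in the worker makes the thread wait for each enqueue to complete, which gives simple backpressure if the queue is later given a `maxsize`.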

AWS and DevOps

- Terraform: Used Infrastructure as Code (IaC) to define and manage cloud resources via code.

  - Provisioned AWS resources with the `aws` provider, avoiding the `docker` provider since Kubernetes handled container orchestration.

  - Benefits of IaC: Enables reproducibility, version control, and reduces manual errors in infrastructure deployment.

  - Terraform state file is often stored remotely (commonly in an S3 bucket) to enable locking and shared access.


- Docker: Containerized the app for consistent deployment and portability.

  - Used Docker volumes to persist database state, since container filesystems are ephemeral.

  - Optimized Dockerfiles for faster builds and smaller image sizes:

    - Multi-stage builds: Reduced final image size by separating build dependencies from runtime.

    - Lighter base images: Used minimal images (e.g., `alpine`) to reduce attack surface and resource usage.

    - Efficient Docker caching: Ordered Dockerfile layers to maximize cache reuse and speed up builds.

- Kubernetes (AWS EKS): Orchestrated containers for efficient scaling and resource management.

  - In my microservices architecture, it manages and auto-scales Docker containers.

  - Ensures availability by restarting failed containers and redistributing workloads if nodes fail.

  - Handles load balancing across services for optimized performance.

  - Used AWS EKS to provision the control plane (the “brain” of Kubernetes), with AWS managing the underlying servers, fault tolerance, and scalability.

- AWS ElastiCache: Leveraged the managed service to deploy Redis, improving reliability and response times with seamless integration into the AWS ecosystem.

- AWS RDS: Hosted PostgreSQL with built-in scalability, backups, and failover support.

Key Takeaways

- Learnt the ins and outs of web components.

- Developed expertise in scalable system design and performance optimization.

- Understood trade-offs between technologies like SSE vs. WebSockets and how to use indexes for optimisation.

- Understood how threading and asynchronous programming work under the hood, especially with Python's GIL.

- Gained hands-on experience with AWS, Terraform, Docker, and Kubernetes for building scalable systems.